How to Use AI Effectively & Responsibly in Research

The more we use AI, the more we see both its opportunities and its risks. Check out these tips for using AI responsibly in research.

By now, we all know AI is a powerful tool for efficiency and scale across research workflows. But the more we use it, the more responsibility we must take.

Responsible AI requires thinking through the potential pitfalls and proactively mitigating them.

“It’s about repeatedly asking the questions — what could go wrong? Who can be harmed? How?” said Mihaela (“Mickey”) Vorvoreanu in a conversation about the road to responsible AI. (Mickey is the director of UX Research & Responsible AI Education at Aether, Microsoft's research and advisory body on AI ethics and effects, where she has a front-row view on what it will take to get there safely.)

Here's how Mickey and other leading UX researchers are approaching responsible AI, so you can, too.

Create a Policy for AI Usage

Your team can’t stay within the guardrails if you don't have any. No matter where your organization is in its AI adoption, you'll want to take a giant step back and create a Responsible AI policy.

Mickey and her Microsoft colleagues developed the Responsible AI Maturity Model, which can act as your guiding light. The tool is designed to be used in strategic planning workshops to develop responsible AI policies, processes, and infrastructure.

In the same workshop, gain insight into how your team uses AI now. What’s working, what’s not, and what concerns have come up?

From there, create a formal policy that clearly defines your company’s AI stance. At the same time, make sure it’s flexible enough that anyone could adapt it to their specific role and responsibilities.

Each year, revisit the policy to ensure it still reflects how AI is being used and addresses any new concerns that have come up.

Adhere to Data & Privacy Requirements

Be aware of data storage locations and compliance requirements for research projects.

For example, if you’re partnering with a government agency, you may not be allowed to upload data into a platform or store meeting transcripts.

“If you do work for them, you have to revert back to traditional methods, and it’s so much longer,” said Dan Lemmon, Research Manager at The Social Agency.

No matter who you work with, it’s important to know your AI tools’ security certifications. For instance, HeyMarvin has achieved ISO/IEC 42001 certification, the international standard for responsible AI management. This certification complements our existing ISO 27001, HIPAA, GDPR, and SOC 2 frameworks.

“Our customers trust us with their most valuable customer feedback and market insights, and they deserve independent proof that our AI is built responsibly,” said Prayag Narula, Co-Founder and CEO of HeyMarvin.

Maintain Human Oversight & Critical Thinking

Choose a specific team member to set up and monitor each AI system, especially for complex or sensitive research tasks. Putting one person in charge creates a sense of ownership and accountability. This person should oversee how the system is used and ensure that usage follows internal AI policies.

In addition, there should always be a human in the loop when it comes to reviewing AI output. The person prompting AI should also be responsible for reviewing the results to ensure accuracy and business relevance.

"Make sure that you're not just taking what is being created at face value," said Janelle Estes, Bentley University’s Experience Design Platform Director.

There are also tasks where AI shouldn’t replace human judgment, such as interpreting findings and making strategic decisions.

Train Your Team on AI Protocols

Allocate time to explore and assess new AI tools. Once you find the right ones, make sure your team is properly trained on how to use them. Anyone can ask AI a question and get an answer. But crafting a prompt that returns a result that’s relevant to your business goals? That’s an advanced skill.

“Generative AI is just like your smart external consultant that can only give you feedback or insight based on the context that you've provided it,” Janelle said.

Once you’ve trained your team, they can use AI to take on some of the more repetitive administrative tasks, freeing up their time for critical thinking and strategy. This presents a big opportunity, especially for junior researchers.

“It closes that gap between a senior researcher and a junior researcher in terms of what they actually spend their time doing,” Dan said.

Be Transparent About Your AI Process

One of the most important aspects of responsible AI is transparency. Make participants aware of the role AI plays in your research process. Maybe a chatbot will moderate the interview, or an AI tool will transcribe the session. Let them know what to expect in terms of AI interactions.

Also, make sure they know how you’re handling their data (e.g., stored in a secure research repository). This shows participants they can trust you because you handle their information with care.

Responsible AI Requires People, Not Just Tech

It’s up to all of us (not just the companies that build technology) to work toward responsible AI.

In the beginning, it was hard to know which AI tools to pick, what to do with them, and when. Now, our understanding of AI and our skill in using it have evolved. We’re no longer novice users.

As our understanding of AI expands, it’s our responsibility to strengthen our ethical use of it by asking, “Are we using AI in a way that reflects company values?”

Want to learn more ways UX researchers are using AI? Explore our guide, “The Modern Research Workflow.”

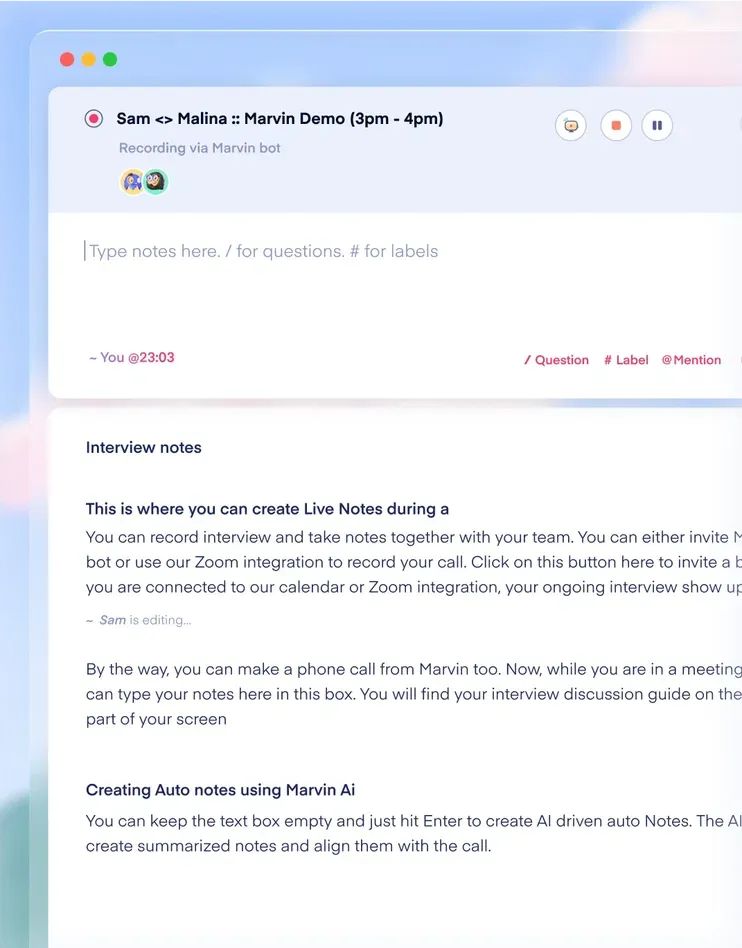

