Why ‘Research Slop’ Is a Technology Problem

Learn why better, dedicated AI and data pipelines are the solution to high-quality, high-volume research.

With AI everywhere, I’m seeing a lot of panic about “research slop”: a wave of low-quality, high-volume research outputs that lack depth and rigor and crowd out good, in-depth research. In my view, blaming AI for research slop misses the point. It’s an easy argument that hides the real issue: People are using low-effort, unspecialized AI to do work that requires either research-specific tools or sophisticated data pipelines.

This is not an AI problem; it’s a tooling problem. Many people are using tools that don’t fit their use cases. Effective research requires AI that can handle large amounts of context, is tied into real data pipelines, and is shaped by the way researchers actually work. Getting there means working with specialized tools, sophisticated data pipelines, or both. When you get that part right, AI can enable research that is both high-quality and high-volume.

The solution to “research slop” is not to use less AI, but to use better, more specialized AI.

Busting the Myth of High Volume = Low Quality

Another common misconception is that high volume by definition leads to low-quality research, or at the very least displaces high-quality research.

In reality, volume and quality aren’t opposites. In fact, I would argue that we are not even close to reaching the optimal volume of research the world needs. The real need of the hour is 10-100x more research.

Consider how many product, marketing, pricing, or strategy updates launch without any input from users, customers, or stakeholders. What if we could magically back every decision with research? How much impact could we drive? Now consider this: How many decisions in your org are actually driven by research? Far too little research is being done today for any of it to be displaced.

The real displacement challenge lies not in creating research at scale but in consuming it. Companies, like individuals, have limited attention spans and can absorb only so much information. Outputs need to be actionable, digestible, and tailored to stakeholder needs. Guess what: the right AI tools can help. When those pieces are in place, AI doesn’t cheapen research; it amplifies its value.

It’s also worth remembering that not every research question calls for a months-long deep dive. Sometimes “good enough” is exactly what’s needed. AI helps researchers tighten timelines and get the answers they need with less manual effort, freeing up resources for deeper work where it really matters. Low-effort research has always existed; AI just makes it more efficient.

Context Is King and Requires the Right Tools

I am not an AI maximalist. I build AI products for a living and use LLMs every day, so I am very aware of their limitations, and those limitations are real and serious.

The most frustrating problem with LLMs is how unevenly they use the context they are given. We all know that more context can help an LLM produce better, more nuanced results. But the context window isn’t infinite, and when you supply a large amount of context, the LLM can collapse around a few data points. The threshold where this happens is lower than you might expect. Even LLMs that can technically accept very large inputs tend to overlook what’s in the middle and focus on the beginning and end of the context window. This is known as the “lost in the middle” problem, and the only current solution is to build data engineering around it. Even when you work around it and force the LLM to draw on hundreds of thousands of tokens for its analysis, its conclusions may be too general to provide real insight.
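One common data-engineering workaround for the “lost in the middle” effect is to reorder retrieved chunks so the most relevant ones sit at the beginning and end of the prompt, and the least relevant fall in the middle. The Python sketch below assumes each chunk already has a relevance score (for example, from a retrieval step); the function name `reorder_for_long_context` and the scores are hypothetical, and this illustrates the general idea rather than how any particular product manages context.

```python
from typing import List, Tuple


def reorder_for_long_context(scored_chunks: List[Tuple[str, float]]) -> List[str]:
    """Put the highest-scoring chunks at the edges of the prompt.

    Assumption: each chunk already carries a relevance score
    (higher = more relevant), e.g. from a retrieval step.
    """
    # Rank chunks from most to least relevant.
    ranked = sorted(scored_chunks, key=lambda pair: pair[1], reverse=True)
    front: List[str] = []
    back: List[str] = []
    # Deal ranked chunks alternately to the front and back lists,
    # so the weakest chunks end up in the middle.
    for i, (text, _score) in enumerate(ranked):
        (front if i % 2 == 0 else back).append(text)
    # Reverse the back list so its most relevant chunk lands last.
    return front + back[::-1]


chunks = [("a", 0.9), ("b", 0.1), ("c", 0.5), ("d", 0.7), ("e", 0.3)]
print(reorder_for_long_context(chunks))  # ['a', 'c', 'b', 'e', 'd']
```

The top-ranked chunk (`a`) opens the prompt, the runner-up (`d`) closes it, and the weakest (`b`) is buried in the middle, where a model is least likely to rely on it anyway.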

My point is not that these flaws don’t exist, but that they can largely be mitigated with sophisticated data pipelines, context chunking, and prompt chaining via agents. And lest we forget, overwhelming context is not a problem unique to AI. Human researchers struggle when they are overloaded, too. How often have researchers been criticized for stating the obvious? Experienced research leaders know all too well how often they need to narrow the scope of their teammates’ projects to get actionable results. Using the wrong tool with too broad a scope is not AI slop; it’s user error.
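To make context chunking and prompt chaining more concrete, here is a minimal map-reduce sketch in Python. It assumes a caller-supplied `summarize` function standing in for a real LLM call and a `combine` function for the final step. Each document is split into word-bounded chunks (word count is a rough, assumed proxy for tokens), each chunk is summarized on its own, and the cited notes are then combined, so no single step has to hold every transcript at once. The names `Chunk`, `chunk_document`, `map_reduce_analysis`, `first_sentence`, and the `interview-01`-style IDs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Chunk:
    source_id: str  # hypothetical ID of the source artifact, e.g. a transcript
    index: int      # position of this chunk within its source
    text: str


def chunk_document(source_id: str, text: str, max_words: int = 200) -> List[Chunk]:
    """Split a document into chunks of at most `max_words` words.

    Word count is an assumed, rough stand-in for a real tokenizer.
    """
    words = text.split()
    return [
        Chunk(source_id, n, " ".join(words[start:start + max_words]))
        for n, start in enumerate(range(0, len(words), max_words))
    ]


def map_reduce_analysis(
    documents: Dict[str, str],
    summarize: Callable[[str], str],
    combine: Callable[[List[str]], str],
    max_words: int = 200,
) -> str:
    """Map: summarize each chunk on its own. Reduce: combine the cited notes."""
    notes: List[str] = []
    for source_id, text in documents.items():
        for chunk in chunk_document(source_id, text, max_words):
            # Tag every note with its source chunk so findings stay traceable.
            notes.append(f"[{chunk.source_id}#{chunk.index}] {summarize(chunk.text)}")
    # The reduce step only sees short, cited notes, not the raw transcripts.
    return combine(notes)


def first_sentence(text: str) -> str:
    # Toy stand-in for an LLM call so the sketch runs offline.
    return text.split(". ")[0].rstrip(".") + "."


docs = {
    "interview-01": "The pricing page is confusing. Users compare plans in a spreadsheet.",
    "interview-02": "Onboarding took too long. Setup needed an admin.",
}
print(map_reduce_analysis(docs, first_sentence, "\n".join, max_words=50))
```

With the toy stub, this prints one cited line per interview: `[interview-01#0] The pricing page is confusing.` and `[interview-02#0] Onboarding took too long.` In a real pipeline, `summarize` and `combine` would each be model calls (the prompt chain), and the `[source#chunk]` tags are what let a reader trace a finding back to the material it came from. This is one possible design, not a description of how any specific tool works.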

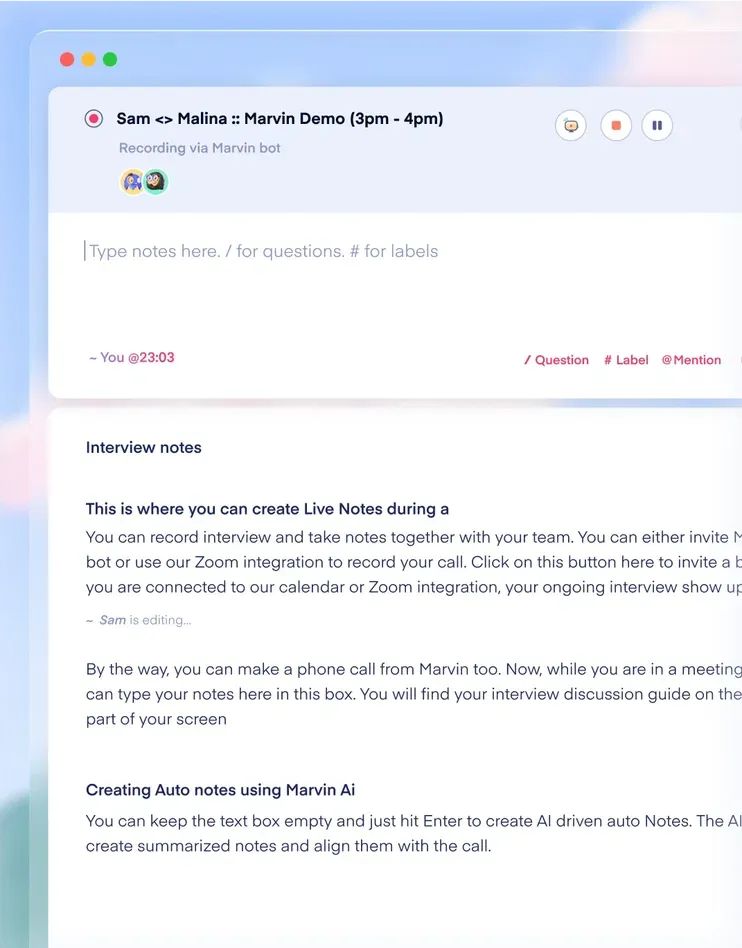

We can empower researchers by giving them tools built for the way they work, rather than expecting generic chatbots to moonlight as research platforms. This requires investment in data pipelines, context management, and tools that understand research workflows instead of just flattening them. One of the clearest examples is analyzing data from thousands of interviews, surveys, and other research artifacts. Purpose-built tools (like Marvin) don’t have the same context restrictions as general-purpose LLMs. In fact, they were built to enable large-scale, complex analysis within minutes, with references back to the source materials for further interrogation.

We also have to be honest with ourselves about the limits here. AI will never fully replicate the nuanced, interpersonal understanding that experienced researchers bring to the job. What AI can do when it’s configured properly is support the mixed-method approaches and stakeholder-specific outputs that researchers need to elevate their work.

Research Slop Is a Practice Problem, Not an AI Problem

Low-effort research exists with or without AI. Ultimately, the challenges masquerading as research slop are issues that come with democratizing the craft: lack of transparency, weak bias checks, and stakeholder misalignment. These problems are not unique to AI, nor are they caused by it. If anything, the right tools can help us tackle them in new ways by making our work more transparent, more auditable, and easier to digest and self-serve.

Researchers should always acknowledge the tools they use, including AI tools, and any biases in their data or workflows. The advantage now is that AI can help surface and track these biases rather than bury them. The increased accessibility of research is a positive trend, and the practice should lead from the front rather than retreat from the technology.

The solution to research slop is not to refuse to use AI. Everyone in the org needs to advocate for the right technology and resources to conduct better research that drives organization-wide decisions. Thanks partly to AI, we are on the cusp of companies realizing that a deeper understanding of their customers (especially through qualitative research) is a major differentiator. We have a lot to teach the world about our craft, and we need to tap into our superpowers rather than turn away from a new technology that, whether we like it or not, will continue to capture the zeitgeist for a long time.

Thank you to Jess Holbrook, an incredible leader in our space, for sharing his indispensable feedback on this article. Thank you also to Cari Murray for providing editorial guidance and vision.

–

Want to learn more about our favorite specialized AI tools? Request a demo of Marvin!
